The Rise of Privacy by Design

6 April 2023 - Gintare Venzlauskaite

Awareness of, and concern about, personal privacy in the digital world is rising. We can see it in the growing number of data protection and privacy laws across the globe, the penalties issued to companies breaching existing regulations, the awareness among users and consumers concerned with (ab)uses of their data, the development of privacy technology, and the demand for privacy professionals. It is therefore not surprising that the weeks preceding and following this year’s Data Privacy Day saw discussions revolving around the trends driving change, the gaps in policies, and deceptive user-facing design practices, as well as recommendations to companies and organisations to act upon various aspects of privacy as it becomes an ever more important factor in securing consumer trust. With that in mind, we take a look at recent developments in this area and point out key considerations relevant to organisations and UX professionals.

The Issue of Data Privacy

Over the last few years, personal data and privacy have been steadily gathering more attention in private, professional, and corporate spheres. This is partially a result of the rollout of data protection laws, such as GDPR in Europe, and similar initiatives elsewhere, including in some US states like California and Vermont. Even more changes are in the making globally. However, awareness has also been fuelled by multiple scandals, such as Cambridge Analytica, and a growing number of lawsuits against companies such as Google, Meta/Facebook, Amazon, Epic Games and others who have failed to follow relevant regulations. These failings include a lack of clarity and accessibility in their privacy policies and settings, trading users’ data or making it difficult to opt out, not securing sensitive data, and employing deceptive design practices that cause users to share more data than intended.

From the user (or data subject) perspective, the common viewpoint of ‘I have nothing to hide’ or ‘I’m not interesting enough to be worried’ has also been shifting towards a collective awareness of the monetary value of our digital footprint for companies and data brokers. The many activities we engage in online are tracked, scooped up, pooled together and then used for commercial and political advantage without explanation or informed consent, with potentially significant consequences for us as individuals and for societies at large.

While 10-20 years ago consumers rarely voiced concerns about data collection, a survey run by KPMG in 2021 revealed that 86% of respondents reported growing concerns about their data privacy, with 40% not trusting companies to handle their data ethically (Lance 2021). This reflects a growing wariness of information sharing, as people consider how to reduce their exposure to data harvesting.

These mounting concerns are prompting a further push to improve data and privacy protection regulations, new professional community recommendations promoting privacy by design, as well as privacy-forward solutions that seek to secure user privacy and, by extension, gain consumer trust. At the same time, however, as Harry Brignull (known for defining the concept of deceptive design) acknowledges, the fact that businesses are doing their best to make money means that loopholes in laws are likely to be exploited, including in the form of deceptive design.

This could be a short-sighted strategy, however. Marketing specialist and writer Jonathan Joseph at Ketch invites businesses to rethink their approach and instead make privacy a core value to secure customer trust. He quotes research on consumer perspectives on privacy conducted in the US, which showed that 74% of respondents ranked data privacy among their top values and concerns. This result sits significantly higher than, for instance, customers’ concern with businesses’ impact on the environment. In addition, Brignull’s own work and that of many others is a testament to the growing concern and user discontent with companies who resort to trickery in design or to privacy washing.

It is therefore unsurprising that there is a growing number of recommendations from institutions, business reporting and consultants for enterprises and organisations not only to avoid being complicit in shady personal data practices or merely ticking compliance boxes, but also to go the extra mile to secure digital trust. In the words of lawyer, researcher, and content creator Luiza Jarovsky, ‘users no longer tolerate being tricked and expect companies to treat them fairly’. Failing to be fair and transparent can result in reputational damage for organisations. Therefore, as prompted by Microsoft’s Privacy Officer Julie Brill, it is important for companies to have a well-rounded, future-proof privacy strategy recognisable by intended audiences, be they users, customers, clients or stakeholders.

The Challenges of Privacy by Design and What It Means for UX Professionals

The developments in privacy are so dynamic that the International Association of Privacy Professionals pronounced 2023 ‘The year of more’, with predictions of significant changes in legislation at state and global levels, growth in privacy technology, and increased demand for privacy professionals. As the ISACA report (2023, p.4) points out, ‘the demand for privacy professionals is expected to increase over the next year for technical privacy professionals and legal/compliance privacy professionals’. However, the understaffing issue has improved in comparison to last year, suggesting a positively changing attitude towards privacy among enterprises. In the context of these rapid changes in the privacy landscape, the considerations recommended to companies by relevant professional organisations and privacy professionals also include making privacy teams more cohesive, and other cross-company teams more privacy-aware, by introducing or increasing regular training.

While many companies are already investing more in their privacy practices and hiring relevant professionals, these are mostly legal and technical staff (lawyers and engineers) who are responsible for interpreting the relevant laws and implementing the necessary changes across systems. However, this does not always extend to the end user-facing aspects of privacy, including UI and/or UX teams.

The UX field prides itself on its human-centred philosophy and commitment to serve user needs through an optimal experience. However, when balancing business needs and user needs in a data-driven world, user data can fall victim to either unconscious neglect or conscious strategic goals, which can lead teams into the grey area of deceptive design practices. Unfortunately, when business needs take precedence over user needs, there is a heightened risk of harm to the user and human experience, privacy being a prime example. This has been demonstrated by many reported cases of unsavoury targeted advertising. Examples include the online gambling industry exploiting the vulnerabilities of its customers, as revealed by Wolfie Christl’s research, and concerns about the possible targeting of data-profiled users of reproductive health tracking apps following the US Supreme Court’s ruling to overturn Roe v. Wade in June 2022.

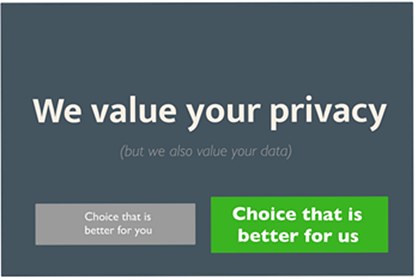

As users, we are all familiar with cookie banners that make us work a little bit harder to refuse being tracked via cookies, even though we barely understand how they “crumble” down the line. Privacy and security settings can also be a minefield, often lacking plain language and explanations of the purposes and uses of user data. Reaching out to privacy teams often results in long waits and few answers on how their privacy policy can be deciphered into “human language”. And even though many websites and apps have stepped up in this regard, especially in giving more control to users willing to customise their sharing and tracking choices, more needs to be done to provide users with information, choice, control, and an overall understanding of how much of their data is collected and how it is handled.

Admittedly, it is difficult to blame designers or content writers for a lack of privacy considerations in their work when there is little guidance on how data protection and privacy laws translate into practical applications in user-facing versions of products and services. As demonstrated by Lei Nelissen and Mathias Funk’s research (2022), designers are often left to their own devices. As such, decisions are based on their personal judgement, awareness, and knowledge. Moreover, depending on the company they work for, pushing a privacy-friendly agenda might not be welcomed or possible. The authors describe this as a significant gap between laws and actionable guidelines, which unfortunately often implicates designers in proliferating deceptive design practices. This in turn leaves users with choices that they might not make if given more clearly formulated options.

The Developments in Sight

Notably, the awareness of, and desire to fill, the gap between policy and practice is already out there. The changes are noticeable within the communities of practice, which are introducing and adopting design approaches that avoid deceptive patterns geared towards promoting data collection and oversharing, such as Confirm-shaming, Privacy zuckering, Forced registration or Roach motel. Examples include the Decepticon project, which aims to create a platform for creating and sharing know-how on manipulative and deceptive patterns and decisional interference, as well as providing relevant research and resources. Another is the recently launched FairPatterns website, which offers assessment services, off-the-shelf solutions, and training for the avoidance of deceptive design. Privacy professionals and advocates are also promoting Privacy UX, offering possible guidance for consideration. For example, Luiza Jarovsky invites us to consider the application of Privacy-Enhancing Design, a list of heuristics intended to guide the practical implementation of data protection rules.

At an institutional level, we see more advice coming as well. For example, in January 2023, the European Data Protection Board published a Draft Report of the work undertaken by the Cookie Banner Taskforce, which features opinions about such practices as no reject button in the first layer, pre-ticked boxes, deceptive button contrast or claimed legitimate interest in the list of purposes. This document adds to various other EU reports concerned with the prevalence of deceptive design across platforms of all sizes. One example is a study by the Directorate-General for Justice and Consumers. Finally, the International Organisation for Standardisation (ISO) announced the adoption of the Privacy-by-Design standard (31700), which invites organisations and professionals to default to privacy-preserving principles and guides them along various stages of a product/service’s data cycle.
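
For teams that want to turn the four cookie banner practices named above into a repeatable check, a minimal sketch might look like the following. It is an illustration only, not the Taskforce's method: the `Banner` and `Purpose` structures, the field names, the button labels it looks for, and the contrast rule (flagging a reject button with less than half the contrast of the accept button) are all assumptions made for the example.

```python
from dataclasses import dataclass, field


@dataclass
class Purpose:
    """One data-processing purpose listed in the banner (hypothetical model)."""
    name: str
    pre_ticked: bool = False        # box is already checked before the user acts
    legal_basis: str = "consent"    # assumed values: "consent" or "legitimate_interest"


@dataclass
class Banner:
    """A simplified description of a cookie banner (hypothetical model)."""
    first_layer_buttons: list       # button labels shown on the first layer
    accept_contrast: float          # contrast ratio of the accept button
    reject_contrast: float          # contrast ratio of the reject button
    purposes: list = field(default_factory=list)


def audit_banner(banner):
    """Return the practices from the list above that this banner exhibits."""
    findings = []
    labels = [label.lower() for label in banner.first_layer_buttons]

    # 1. No way to refuse on the first layer (label matching is an assumption).
    if not any("reject" in label or "decline" in label for label in labels):
        findings.append("no reject button in the first layer")

    # 2. Any purpose that is switched on before the user has chosen.
    if any(p.pre_ticked for p in banner.purposes):
        findings.append("pre-ticked boxes")

    # 3. Illustrative heuristic only: reject button far less visible than accept.
    if banner.reject_contrast < banner.accept_contrast / 2:
        findings.append("deceptive button contrast")

    # 4. Purposes relying on legitimate interest instead of consent.
    if any(p.legal_basis == "legitimate_interest" for p in banner.purposes):
        findings.append("claimed legitimate interest in the list of purposes")

    return findings


if __name__ == "__main__":
    example = Banner(
        first_layer_buttons=["Accept all", "Settings"],
        accept_contrast=7.0,
        reject_contrast=2.0,
        purposes=[Purpose("advertising", pre_ticked=True)],
    )
    for finding in audit_banner(example):
        print(finding)
```

A check like this cannot replace a design review or legal advice, but it shows how written guidance can be expressed as concrete, testable criteria that designers and developers share.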

Hand in hand with this, various privacy solutions are picking up as well, starting with a range of privacy-enhancing technologies (PETs) and off-the-shelf products and services for businesses, including privacy training, deceptive design audits, pre-designed privacy-friendly interfaces, etc. This extends to privacy-friendly products and practices for users (customising privacy settings, opting out of tracking, opting for privacy-defaulting apps, browsers, file managers, etc.), as well as future-focused innovations aiming to shift the way we think about and use our data (see, for example, Prifina, who are working towards a solution allowing people to own and utilise their data themselves). All of this mirrors the point made by Jaap-Henk Hoepman, who in his book Privacy Is Hard and Seven Other Myths (2021, p. 63) says that ‘technology is not only part of the problem, but also an essential part of solution’.

Conclusion

Collectively, the developments covered in this article represent a movement that is picking up pace and is unlikely to slow down. While innovation-led and regulation-led approaches will each shape data privacy laws and practices in their own way, in the countries and regions that align themselves with human rights considerations we are likely to witness growing consumer expectations and/or legal requirements for privacy maturity in companies and organisations.

However, there is still a long way to go in terms of reclaiming privacy as an individual value, seeing it as a collective democracy-preserving value, and embracing it as a trust-building business value. Turning the tide is a complex endeavour. It requires challenging the sharp power asymmetries between data subjects and data-utilising market actors. It also means enabling enterprises and organisations willing to be part of the privacy-forward changes to shift the data economy towards an alternative set of practices. Finally, it cannot be done without equipping practitioners with the knowledge and creative space to design fairer, more transparent, and more accessible tools and solutions.

What do you think?

What are the situation and practices like in your organisation, and what can you do, in your own role, to stay in sync with current developments? Do you acknowledge that privacy is part of the human experience and prioritise it in your own digital life? How about your organisation? What does it do to gain and maintain user trust? Are you proactive in seeking and sharing privacy and data security awareness or actionable solutions (no matter how small) with your circles? Do you or your organisation recognise that privacy should be treated as a requirement equal to others throughout a system or product’s life cycle, and that this is a necessary condition for practising privacy by design?

We believe that privacy-friendly UX is the right way to go and acknowledge the pivotal role that UX professionals will play in the development, deployment, and advocacy for privacy by design and default. User Vision is following the developments in this area, and incorporating Privacy by Design principles into the design advice we offer clients.

If you would like to discuss how we can help you deliver a trusted user experience, please get in touch at: hello@uservision.co.uk

Download this article

You can download a copy of this report, including a list of references and resources, from: https://uservision.egnyte.com/dl/tJSpm1nvlt (password: privacybydesign)
